Reconsidering Programming Efficiency Amidst Climate Change

I have a confession to make: I'm not a classically trained programmer; I'm more of a hack. I didn't go to school to learn computer science. Instead, I studied civil engineering. Did I learn some programming back in those dark ages? Yes, I learned Fortran 77 and promptly forgot it the moment I graduated.

Once I started working in the "real world" I picked up programming to automate various tasks. First, it was goal seek and linear regression in Excel, then it was Lisp routines for AutoCAD. Eventually, I discovered Python in the early 2010s and liked it a lot. It let me write candlestick charts and read CSV files and suddenly I felt like learning modern programming was within my grasp.

Little did I know that I'd end up using Python every day since about 2017 in the machine learning field. Python is everywhere. Nearly every data scientist or machine learning practitioner I know uses it for data munging, data science, big data analytics, and, increasingly, GPT-related work.

Someone once said that Python is the glue that holds the Internet together, and I agree. Yet when I look back on my career, I always come back to Fortran. Not as the ideal computer language, and not like a victim of Stockholm syndrome. No, I come back to it for what I remember about it: a compiled, compact, and thrifty computer language.

Fortran 77

The goal of being thrifty with resources was first drilled into my head in engineering school. My alma mater, NJIT, prided itself on giving all incoming freshmen a desktop computer that we had to assemble ourselves. This was in the early 90s, before the Internet as we know it today.

If I remember the specs correctly, it was an i286 with two 5 1/4" floppy drives and no hard drive. All your programs had to be loaded into memory. I don't remember exactly how small that memory was, but it wasn't more than 64 kilobytes for sure.

For our assignments, we had to write our Fortran programs compactly enough to be loaded directly into the computer's onboard memory. Then we had to spend hours fixing the compilation errors and recompiling.

I thought compiling Fortran code was torture, but I never realized how important that language was to numerical computing. The Python package NumPy has bits of Fortran under the hood and so does the OpenBLAS library.

There's no doubt in my mind that Fortran set a wonderful foundation for our computing world. While it's not spoken about much in my circles, it remains a semi-popular programming language to this day - it's #19 on the May 2023 TIOBE index.

For example, NASA still uses Fortran 90 in its global climate simulation modeling, and it can run on GPUs and handle parallel execution easily.

Wise resource management

After my post on GPT and asking rhetorically what the environmental footprint was of running all these GPUs building LLMs (large language models), I began to think back to my resource-thrifty Fortran programming days.

Compared to Python, Fortran is vastly more resource efficient but harder to write. It takes longer to get things done. If you give a college graduate a laptop with a Fortran and Python environment and tell them to build you something, they'll learn Python faster than Fortran and get it done faster with Python - or so I'd like to believe.

Yet, Python is often criticized as not being as resource efficient. People complain about the Global Interpreter Lock (GIL) not letting Python run in a truly multithreaded way. Workarounds were devised and Python projects like Dask and Cython were created to make Python more efficient. The new release of Python 3.11 is supposed to address some of the speed concerns, though the GIL is still there, so I'm happy things are moving in the right direction.

This speed vs resource debate is one I think about a lot these days, considering how electricity usage affects the climate. Our data centers and support systems churn through electricity in massive amounts.

...the largest data centers require more than 100 megawatts of power capacity, which is enough to power some 80,000 U.S. households, according to energy and climate think tank Energy Innovation. - via TechTarget

Further, a data center's environmental cost doesn't just include the electricity its servers use but also all the support systems that go into running it:

While data centers have become more electrically efficient over the past decade, experts believe that electricity only accounts for around 10% of a data center's CO2 emissions, McGovern said. A data center's infrastructure, including the building and cooling systems, also produces a lot of CO2.

While some cloud providers in different regions of the world generate electricity from green/renewable resources, I can't help but wonder how much of a carbon footprint I make. How much CO2 do I generate when I'm running my projects and hare-brained ideas?

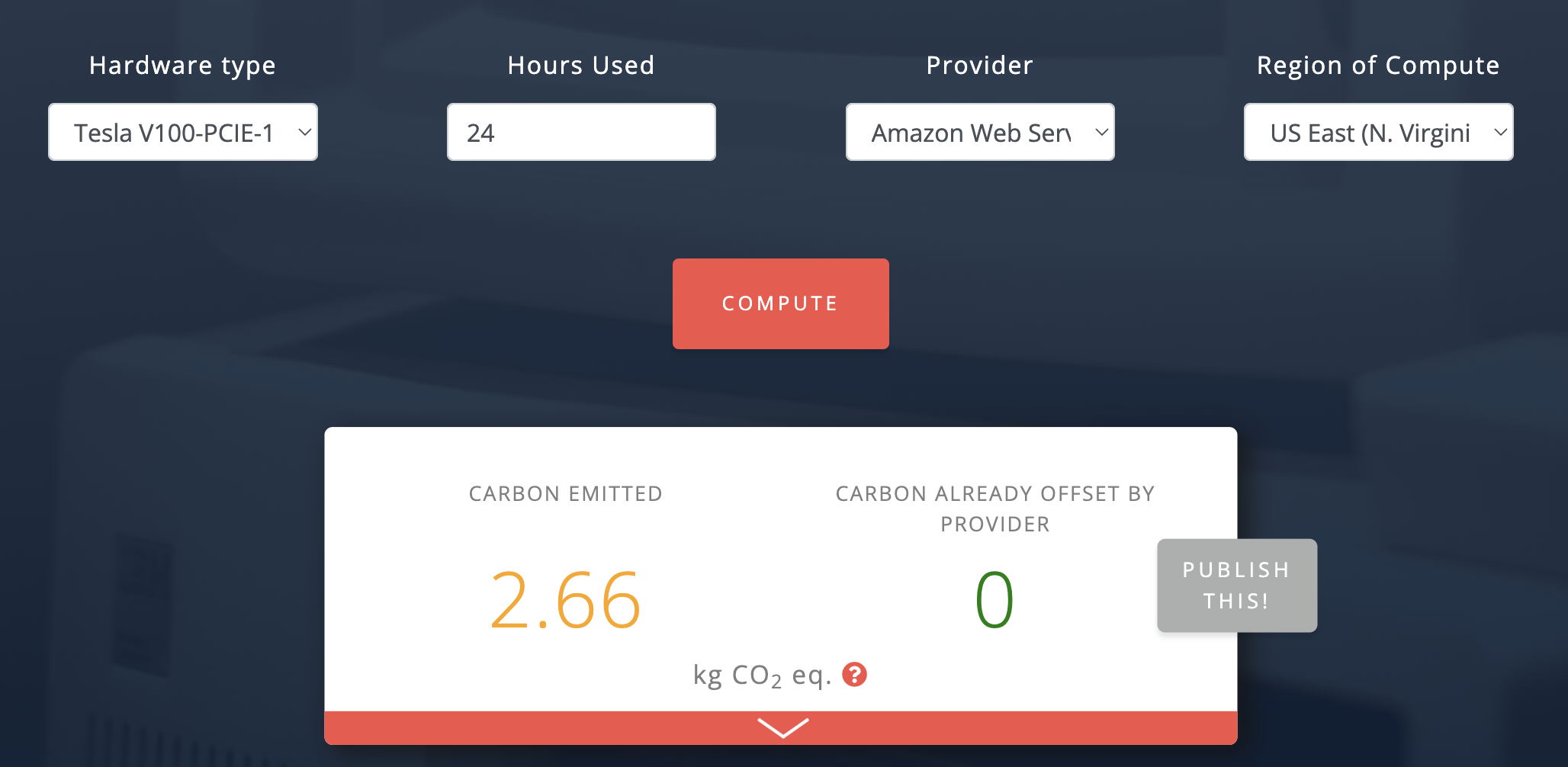

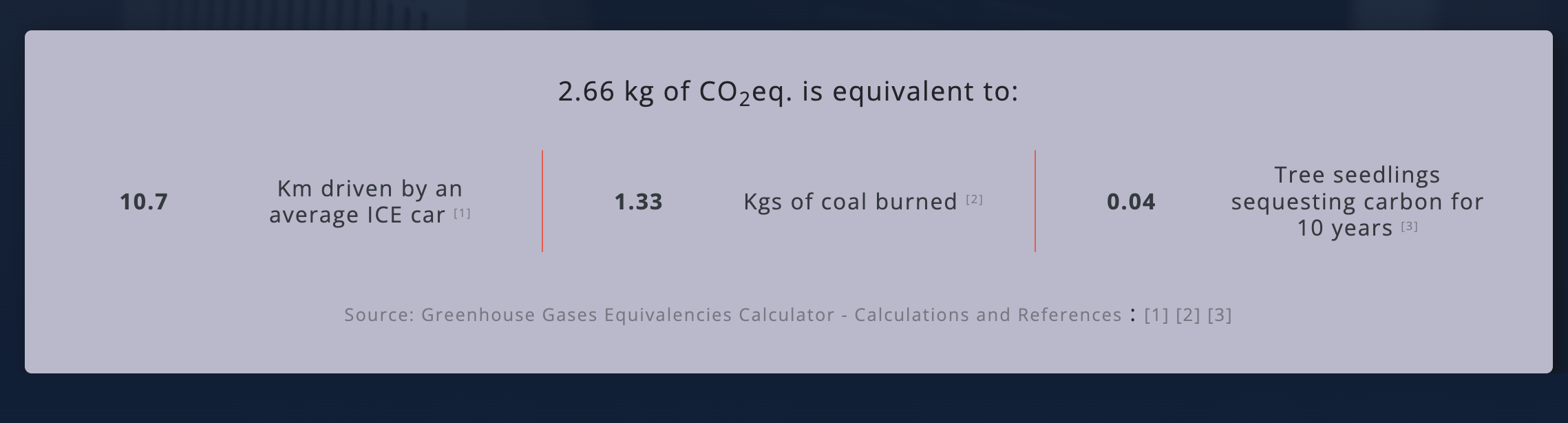

Just running a simple deep learning experiment, with a Tesla V100, over the course of 24 hours on the AWS Virginia region yields me this carbon footprint:

That's the equivalent of driving a car 10.7 km (6.6 miles).
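For a rough sense of where a number like that comes from, here is a minimal back-of-the-envelope sketch. It is not the calculator behind the figure above. The function names are made up for illustration, and every input in the example (GPU power draw, grid carbon intensity, car emissions per kilometer) is an assumed placeholder that you would need to replace with figures for your own hardware and region.

```python
def estimate_co2_kg(power_watts, hours, grid_kg_co2_per_kwh, pue=1.0):
    """Estimate kg of CO2 for a device drawing `power_watts` for `hours`.

    `pue` (power usage effectiveness) scales the energy up to account for
    data center overhead such as cooling; 1.0 means no overhead.
    """
    energy_kwh = power_watts / 1000 * hours * pue
    return energy_kwh * grid_kg_co2_per_kwh


def equivalent_car_km(co2_kg, car_kg_co2_per_km):
    """Convert kg of CO2 into kilometers driven by a car with the given emissions."""
    return co2_kg / car_kg_co2_per_km


if __name__ == "__main__":
    # ASSUMPTIONS: placeholder inputs, not measured or published values.
    gpu_watts = 300        # assumed draw, on the order of one data center GPU at full load
    hours = 24             # one day of experimenting
    grid_intensity = 0.4   # assumed kg CO2 per kWh for the local grid
    car_intensity = 0.25   # assumed kg CO2 per km for a passenger car

    co2 = estimate_co2_kg(gpu_watts, hours, grid_intensity)
    km = equivalent_car_km(co2, car_intensity)
    print(f"{co2:.2f} kg CO2, roughly {km:.1f} km by car")
```

The point of the sketch is the shape of the arithmetic: energy is power times time, and emissions are energy times the carbon intensity of wherever that electricity was generated. The answer swings a lot depending on the region you run in.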

That's just me experimenting over the course of a week. My silly Python scripts are not in production and they're not building deep-learning models on terabytes of data. I wonder just how many kilometers/miles I've "driven" in all my years of tinkering around?

I can't help but wonder how big of a carbon footprint I generated when I forgot to shut down a GPU instance overnight. How much CO2 gas was created because I wanted to squeeze out 1% more accuracy on a grid search? Just how much electricity is wasted by poorly written code (I'm looking at myself in the mirror on this one)? Just how much energy is needed to collect data that has no value?

Would carbon footprints drop if we wrote programs faster, executed them quicker, and consumed fewer resources along the way? What if we chose to use GPUs and other chipsets wisely instead of chasing a slightly more accurate churn model, or mining a cryptocurrency?

Would it make a difference? Would we be able to offset our carbon footprints and help fight climate change?

I don't know the answer but I'm compelled to ask these questions. I was taught to be thrifty in using resources decades ago and when I look in the mirror I see myself as part of the problem. I'm failing at using resources wisely.

Faster, better, cheaper?

All those questions rattle around in my head for weeks, sometimes months. It's not until I make some connection to an article or a data point that anything settles, and that usually ends up answering one question and generating two more.

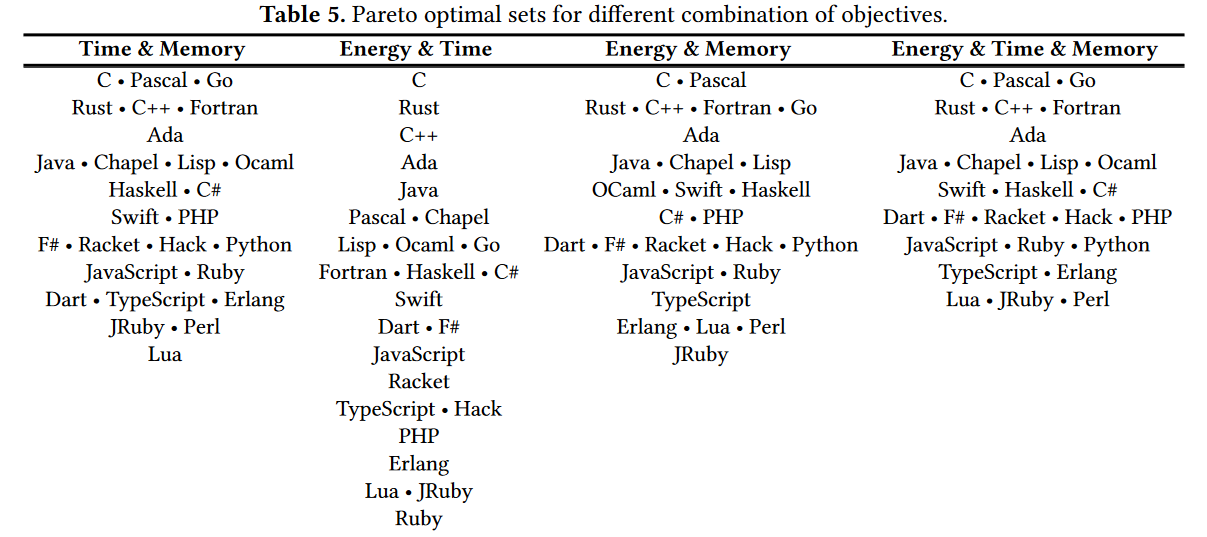

A few years ago I read a report about the energy efficiency of computer languages (updated to 2020), and that got me thinking about all the work I do in Python. It led me back toward Fortran and made me investigate the Go language.

The report looked at three dimensions: energy, time, and memory. The study benchmarked different computer languages relative to the C language and then compared them with each other.
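To make the "relative to a baseline" idea concrete, here is a small sketch in Python. It is not the report's methodology: it compares two Python functions rather than two languages, it only covers time and memory (energy needs hardware counters that the standard library doesn't expose), and the helper names `measure` and `relative_to` are my own for illustration.

```python
import time
import tracemalloc


def measure(func, *args, repeats=5):
    """Return (best wall-clock seconds, peak traced bytes) for func(*args)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        best = min(best, time.perf_counter() - start)

    # Memory is traced in a separate run because tracing slows execution.
    tracemalloc.start()
    func(*args)
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return best, peak


def relative_to(baseline, candidate):
    """Express each candidate metric as a multiple of the baseline metric."""
    return tuple(c / b if b else float("nan") for c, b in zip(candidate, baseline))


def sum_squares_loop(n):
    """Baseline: accumulate in a plain loop, no intermediate container."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def sum_squares_list(n):
    """Candidate: build a full list first, then sum it."""
    return sum([i * i for i in range(n)])


if __name__ == "__main__":
    n = 200_000
    baseline = measure(sum_squares_loop, n)
    candidate = measure(sum_squares_list, n)
    time_ratio, memory_ratio = relative_to(baseline, candidate)
    print(f"time: {time_ratio:.2f}x baseline, memory: {memory_ratio:.2f}x baseline")
```

A ratio above 1.0 means the candidate costs more than the baseline on that dimension. The actual numbers will vary from machine to machine and run to run, which is part of why published benchmarks normalize against a fixed reference.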

Of course, there is no one computer language that captures the top spot for each dimension, but a crop of usual suspects kept showing up in the top five. The languages in the top ranks tended to be compiled languages. They also tended to be the fastest executing ones. Those are C, Rust, C++, Ada, and Java.

What about the slowest ones? The ones that ranked at the bottom of this benchmarking?

The five slowest languages were all interpreted: Lua, Python, Perl, Ruby and Typescript. And the five languages which consumed the most energy were also interpreted: Perl, Python, Ruby, JRuby, and Lua. - via The New Stack

There is no one perfect language but the report summarizes this important bit of information:

But there’s a lot of factors to consider. “It is clear that different programming paradigms and even languages within the same paradigm have a completely different impact on energy consumption, time, and memory,” the researchers write. Yet which one of those is most important will depend on your scenario. (Background tasks, for example, don’t always need the fastest run-time.)

And some applications require the consideration of two factors — for example, energy usage and execution time. In that case, “C is the best solution, since it is dominant in both single objectives,” the researchers write. If you’re trying to save time while using less memory, C, Pascal, and Go “are equivalent” — and the same is true if you’re watching all three variables (time, energy use, and memory use). But if you’re just trying to save energy while using less memory, your best choices are C or Pascal.

There is no one perfect language here and there are trade-offs, but compiled languages appear to be the most thrifty ones over the interpreted languages.

I know my Python friends are going to poke me and say, "I can write faster code in Python than Java any day." I agree. I agree wholeheartedly because we all need to "get shit done," but the speed at which you code is not being evaluated here.

Maybe it needs to be.

Granted, the programmer in the video is an expert and can write code fast, but for approachability, Python wins. Yet, in the hands of this experienced programmer, Fortran wins.

For fun, read this insightful Reddit discussion about implementing neural networks in Fortran.

So what's it going to be? What's the answer? The answer right now is, "It depends." The choices you make will depend on the product or system you want to build and the use case you want to solve.

Resource thrift and climate change

There's a scene in Interstellar where Murphy's teacher tells Matthew McConaughey's character that the world needs farmers instead of engineers. Earth is dying because of a fungal infection that's killing the food system. Of course, the movie's message is that "technology will save the day," but believing that technology is the sole solution is a dangerous bet.

I loved the movie but I'm cautious about the message: we can't solely rely on technology to save the day. We're facing a climate disaster in the next few decades and we continue to pump CO2 into the atmosphere at record rates. Technology should be part of the solution and it should have a seat at the table.

As Carl Sagan once said, "The Earth is the only world known so far to harbor life. There is nowhere else, at least in the near future, to which our species could migrate. Visit, yes. Settle, not yet. Like it or not, for the moment the Earth is where we make our stand."

There is no second Earth waiting for us through a wormhole. Computers, GPUs, deep learning, LLMs, and Bitcoin aren't going away. They've been a big boon for humanity and have increased our quality of life, but at what cost? That's the question I'm interested in answering. What are the hidden costs of all the data centers, all our models, and all our data?

Is being thrifty with our computer-related resources and support systems a waste of time? Or should we answer this question before starting the next project? At a minimum, we need to refocus our work and projects back to thrift and wise resource management.

We need to think hard when choosing the right language for production and what data we need to store. We need to become aware of the hidden environmental footprint we all leave behind. This is where we innovators, coders, and technologists can make our stand.

Additional Reading

There's another benchmarking site for several languages that's worth checking out. Also, check out this MIT Technology Review article about how LLMs are big generators of CO2.
