Navigating Through the Garbage Data World

Data. The foundational block to all things machine learning, deep learning, and AI. Without data, our whole AI industry will come to a screeching halt. No more humming GPUs or Python scripts printing out performance measures or SHAP values.

It's safe to say that collecting and curating data is the cornerstone of our modern society, but what if we're accidentally poisoning it? What if we carelessly generate data as outputs that are inadvertently used as inputs for another model? What are the implications of this garbage data?

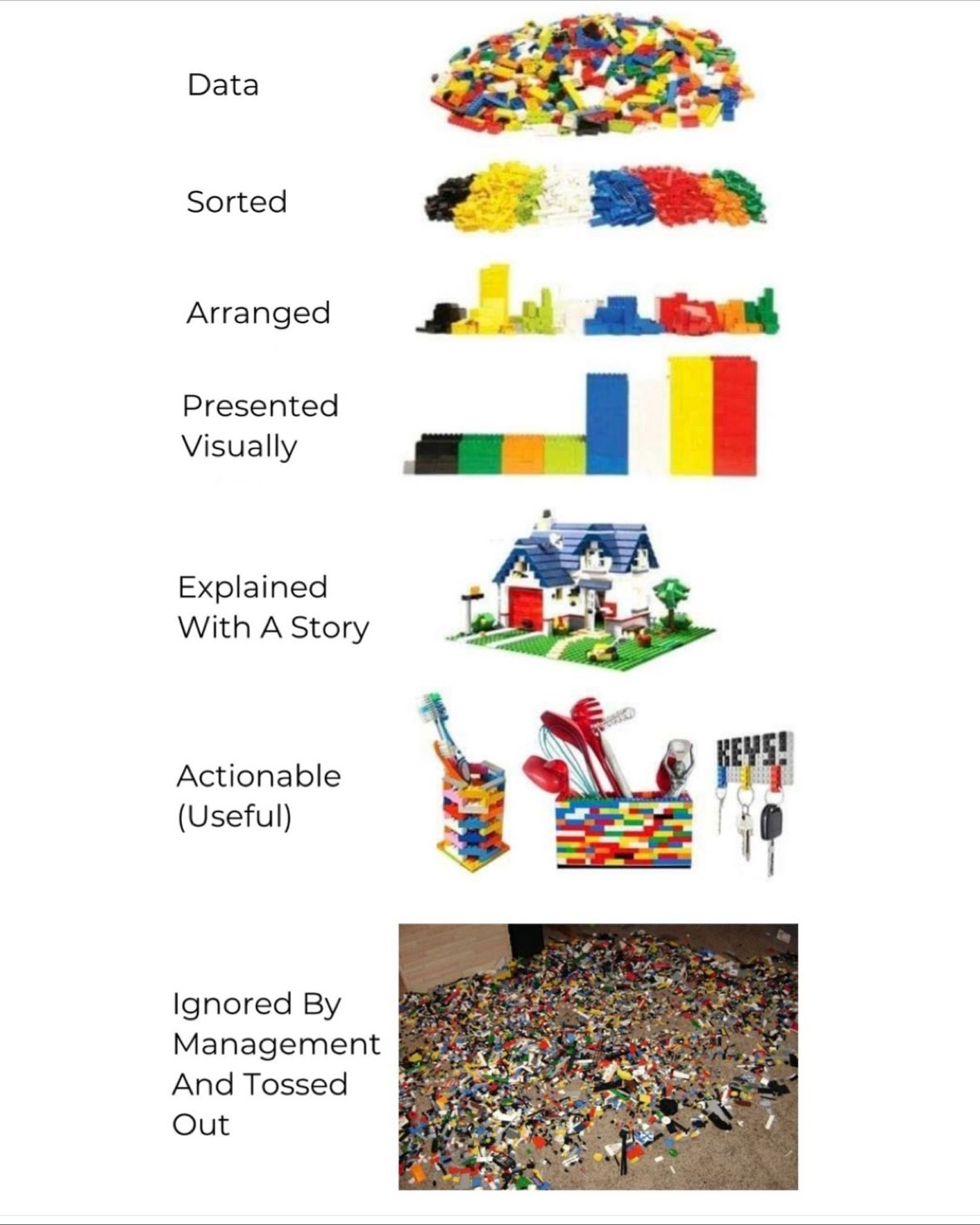

The data

Data is just a record of a transaction. Something happened on this date, at this time, for this quantity or volume. An example would be an earthquake that happened at 6 AM in Bakersfield, CA with a magnitude of 5.5 at a depth of 6 miles.

Or it could be a sales transaction like buying 100 shares of NVDA at $200 per share on August 2022 (numbers are made up). Data is simply a record of something happening with appropriate features associated with that record.

For the earthquake example, its features are date, time, depth, magnitude, and location. For the sales transaction example its stock symbol, share price, share quantity, and price paid.

After we collect enough data points (records) then we can start the process of turning them into information. Information is nothing more than the summarization of data points into something useful for the end user.

If we collect 100 earthquake records and summarize them around the location and time features, then we might see a cluster of earthquakes happening in and around Bakersfield, CA. Perhaps an early indicator of a larger earthquake that could happen.

Likewise, if we summarize the stock trade example by symbols and time, we might see traders interested in buying tech stocks vs utility stocks. More people buying NVDA than ED (Consolidated Edison).

The most important part of transforming data into information is the knowledge-creation phase. This is where we take all that new information and make connections between them to create knowledge. All that summarized data points that were transformed into information are to make knowledge.

So how does this work with AI models? Simple, we use data to build models that create outputs of statistical measures of fit and then we apply interpretability methodologies such as SHAP values to understand why the models are making decisions.

We take that raw data and feed them into the AI models that summarize and organize them. Then we train the model to learn any statistically robust patterns that we can reliably use to make predictions for some outcome.

We create a new type of information from the raw data, one that's built on algorithms and statistical measures of fit. One that's beyond summarizing transactions, one where we can say that the data shows us how it summarizes itself.

Data is the new oil

I don't like the saying that "data is the new oil." Despite my disdain for it, I tend to agree with its underlying premise. Data is the foundational block for our "knowledge" economy. The data a company or individual collects, and uses, can be its competitive advantage that dominates their particular market or industry.

The amount of data that Amazon, for example, collects, can drive price points for products all over the web. This gives Amazon an amazing competitive edge and transformed them into the juggernaut they are today.

Smart companies know that their data is their edge and their decisions should be based on what the data tells them to do.

Hence the demand for products like feature stores or big data clusters. Companies are seeking every possible avenue to squeeze out every possible ounce of information from raw data and end up collecting all kinds of data. They enrich their data with feature generation, third-party data marts, and scrapped social media data.

These companies are becoming more and more reliant on different sources of data to gain and keep their competitive advantage, and that's where the Trojan horse sneaks in.

The Trojan horse

I had a chat with a former colleague of mine. He's a top-notch data scientist at a startup we both worked at and a good friend. During our chat, he shared with me an essay on the growing problem of garbage data.

All of us in the industry and elsewhere have heard the old adage, "garbage in, garbage out" but in today's world of GPT, are we starting to poison the well?

We know that Amazon Kindle Publishing is being flooded with GPT generated books and reviews and Clarkworld, a premier science fiction literary magazine, is cutting off submissions due to AI generated garbage.

Nothing is safe anymore, even the Pentagon wasn't immune from generated fake imagery that spooked the financial markets.

Why am I highlighting these examples? Because of one important thing. These fake essays, images, books, and reviews could end up as some other AI model's training input data. It could poison the model without knowing it if we're careless with data acquisition and use.

The risk that we'll end up building models on generated "fake" data is increasing daily. It's getting harder to discern what's real anymore.

Economic moats

In MBA school we learned about barriers to entry in a market. Some of these barriers are structural barriers like economies of scale or high costs to enter. For example, starting a biotech firm carries a huge barrier to entry because of the high research and setup costs.

Other barriers are artificial and strategic, they're created by existing competitors to keep you out. These barriers range from things like loyalty schemes, branding, patents, and licensing.

Recently a memo was leaked from Google that stated: "We Have No Moat, and Neither Does OpenAI." In other words, open-source large language models (LLMs), which were once considered a competitive advantage for OpenAI, might not be the economic barrier they thought they could be.

I tend to agree with Google on this point, that open-source large language models and the ability to fine-tune them with your data will ultimately win. In my mind, the field is open to open-source LLM companies.

While LLMs are not the moat, then what is? It's the data, the same dang thing that got us into this mess in the first place.

I believe carefully curated data in a sea of GPT-generated garbage will be the key to creating and keeping a company's competitive advantage.

It's the data, stupid

It always comes back to the data. Without processes in place to verify the veracity of your data, you won't have confidence that your models will be correct. I see that this could become a very big problem that will open up new opportunities in the future.

I suspect there will be a new crop of startups that will fill the data veracity niche. They'll comb through social media data and tag suspect data. They'll have to cross-reference Tweets with true news reports. They'll build deep-learning vision models to spot fake images and remove them from future training sets.

These Startups and companies will have to create new processes and systems so they can guarantee that any data they collect and curate will be clean and true.

The future is about data quality and getting rid of the generated garbage.

If you liked this article then please share it with your friends and community. If you'd like a free weekly briefing of curated links and commentary, please consider becoming a subscriber. Thank you!

Member discussion