Navigating Through the Garbage Data World

Every day, the risk to AI grows: we’re building models on data that might not even be real. This undercuts the reliability of all our AI systems

Data. It’s the foundation for everything in AI. Without it, the whole industry grinds to a halt. No more humming GPUs. No more Python scripts spitting out numbers.

Collecting and curating data is everything now. But what if we’re poisoning the well without realizing it? The foundation of AI—reliable data—is now at risk as generated data cycles back into the system as input. This threatens the integrity of information and erodes the truth on which AI depends.

The data

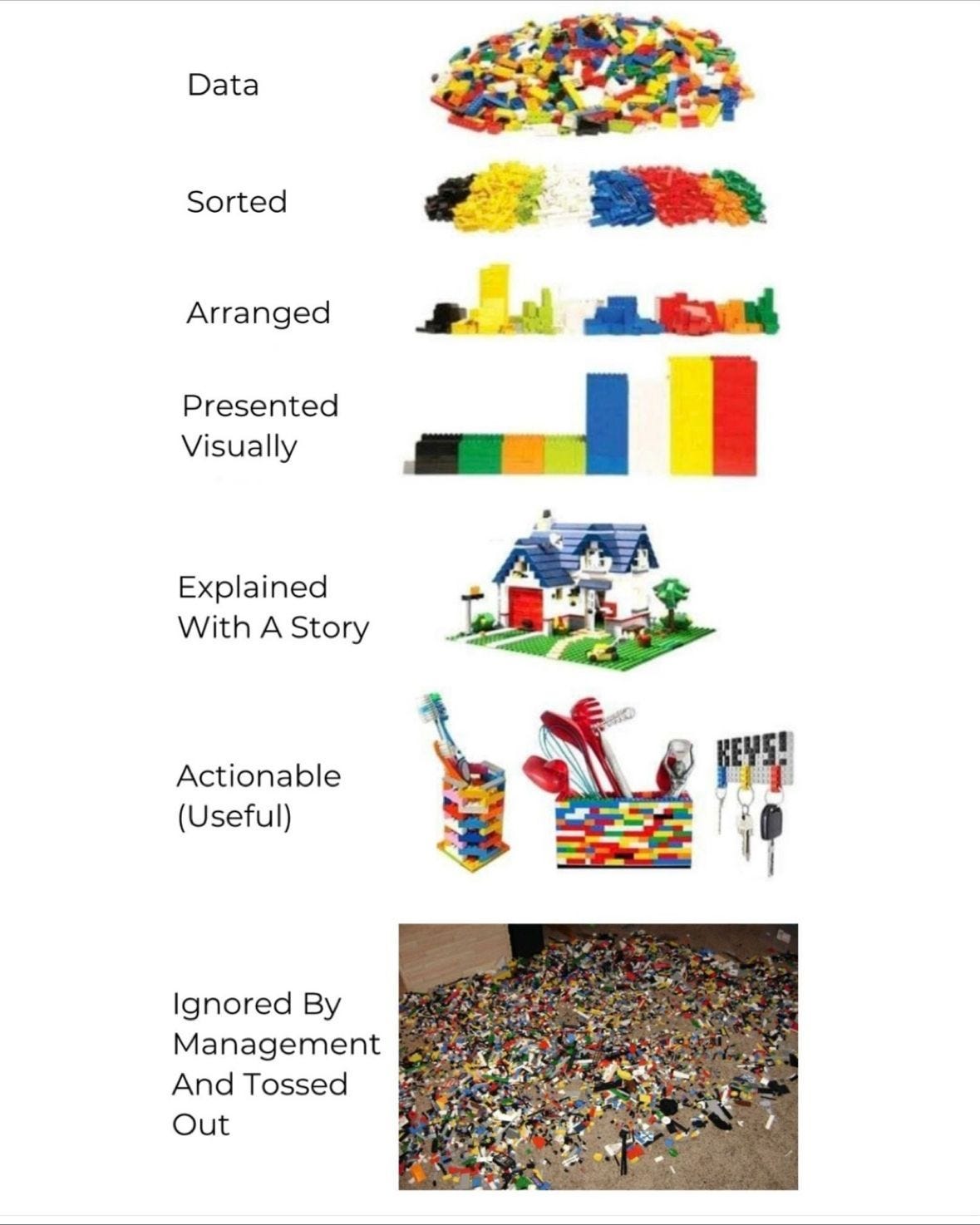

Data is just a record of a transaction. Something happened on this date, at this time, for this quantity or volume. An example would be an earthquake at 6 AM in Bakersfield, CA, with a magnitude of 5.5 at a depth of 6 miles.

Or it could be a sales transaction like buying 100 shares of NVDA at $200 per share in August 2022 (numbers are made up). Data is simply a record of something happening, with appropriate features associated with it.

For the earthquake example, its features are date, time, depth, magnitude, and location. For the sales transaction example, it’s the stock symbol, share price, share quantity, and price paid.

With enough data, we can summarize and shape it into something useful—information we can actually use.

If we collect 100 earthquake records and summarize them by location and time, we might see a cluster of earthquakes in and around Bakersfield, CA. Perhaps an early indicator of a larger earthquake.

Likewise, if we summarize the stock trade example by symbols and time, we might see traders interested in buying tech stocks vs utility stocks. More people are buying NVDA than ED (Consolidated Edison).

But the real magic happens when we connect the dots. That’s how we turn information into knowledge. It’s all about making sense of the patterns.

So how does this play out in AI? We feed the data in, build our models, and then try to figure out why the models make the choices they do.

We train our models to spot real patterns in raw data and then use those patterns to predict.

We end up with something new. Not just a summary, but a story the data tells us about itself.

Data is the new oil

I’ve never liked the phrase “data is the new oil.” But I get why people say it. Data is the foundation of the knowledge economy. The right data can give a company an edge that no one else can touch.

Look at Amazon. The mountain of data they collect lets them set prices everywhere. That’s their edge. That’s how they became a juggernaut.

Smart companies know their data is their edge, guiding decisions based on its analysis.

That’s why companies chase after feature stores and big data clusters. They want every last drop of insight from their data. They pile on features, buy third-party data, scrape social media. Anything for an edge.

But the more companies rely on outside data, the greater the risk of corrupting their foundation. This is the Trojan horse that brings contaminated data—endangering any competitive edge.

The Trojan Horse — Generative AI

I caught up with an old friend, a sharp data scientist I used to work with. He sent me an essay about the growing problem of garbage data. It hit home.

All of us in the industry and elsewhere have heard the adage, “garbage in, garbage out,” but in today’s world of GPT, are we starting to poison the well?

We know that Amazon Kindle Publishing is being flooded with GPT-generated books and reviews, and Clarkworld, a premier science fiction literary magazine, is cutting off submissions due to AI-generated garbage.

Nothing is safe. Even the Pentagon got fooled by fake images, and the markets panicked.

All this fake content—essays, images, reviews—could soon be training the next generation of AI models. Without vigilance, we risk undermining the entire field with poisoned data.

Every day, it gets harder to tell what’s real. The risk of building on fake data keeps climbing, leading to AI systems that could make incorrect choices and amplify existing issues in the real world.

Economic moats

In MBA school, we learned about barriers to market entry. Some of these barriers are structural, such as economies of scale or high entry costs. For example, starting a biotech firm poses a significant barrier to entry due to high research and setup costs.

Other barriers are artificial and strategic; they’re created by existing competitors to keep you out. These barriers include loyalty schemes, branding, patents, and licensing.

Recently, a memo was leaked from Google that stated: “We Have No Moat, and Neither Does OpenAI.” In other words, closed-source large language models (LLMs), which were once considered a competitive advantage for OpenAI, might not be the economic barrier they thought they could be.

I agree with Google here. Open-source models, tuned with your own data, are going to win. The field is wide open.

If LLMs aren’t the moat, what is? It’s the data. The same thing that started this whole mess.

In a sea of AI-generated junk, the real advantage is having clean, carefully curated data. This is the true moat: a foundation of trustworthy data that no competitor can easily replicate.

It’s the data, stupid.

It always comes back to the data. Trustworthy data is the anchor for all AI progress. If you can’t trust your data, you can’t trust your models—a core threat, but also a massive opportunity for those who solve it.

I bet we’ll see new startups pop up to tackle this. They’ll sift through social media, flag the fakes, cross-check Tweets with real news, and build models to spot fake images before they slip into training data.

They’ll have to build new systems to make sure the data they use is actually real.

The future belongs to those who can keep their data clean and free from contamination. Only then can AI fulfill its promise without being undermined from within.

Thank you for reading and supporting my humble newsletter 🙏.

You can also hit the like ❤️ button at the bottom of this email to support me or share it with a friend. It helps me a ton!